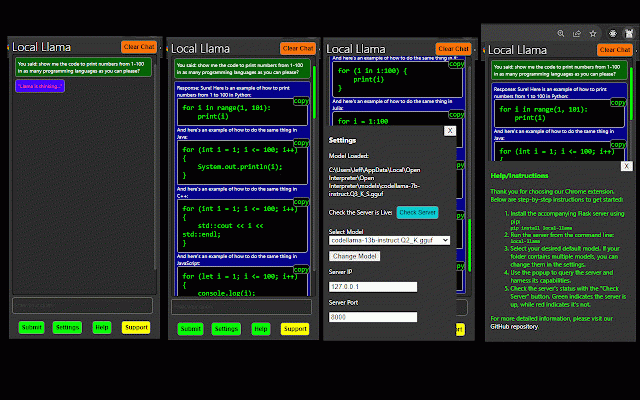

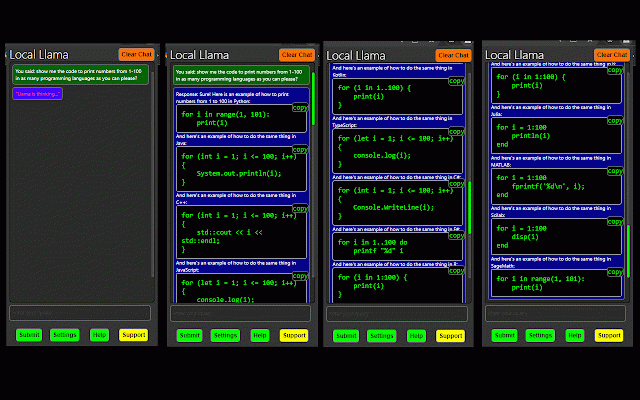

Local LLama LLM AI Chat Query Tool

135 users

Developer: RowDog

Version: 1.0.6

Updated: 2023-10-02

Available in the Chrome Web Store

Elevate your browsing experience with our cutting-edge Chrome extension, designed to seamlessly interact with local models hosted on your own server. This extension allows you to unlock the power of querying local models effortlessly and with precision, all from within your browser.

Our extension is fully compatible with both llama.cpp and .gguf models, providing you with a versatile solution for all your modeling needs.

To get started, simply install the Chrome extension, then set up your local server in a few straightforward steps. Install the latest version of our pip package with the following command:

```
pip install local-llama
```

Then, run:

```
local-llama
```

The provided Python script, which includes a sample Flask server, is also available in our GitHub repository: https://github.com/mrdiamonddirt/local-llama-chrome-extension

With the server running, you can instantly access and query your local models with the convenience of a browser-based interface. Harness the capabilities of your own server and experience the future of browser-based interactions with local models today.
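The listing does not describe how the extension actually talks to the local server. As a hypothetical sketch only, the Python snippet below shows what sending a JSON query to a local model server could look like using just the standard library. The URL `http://127.0.0.1:8000/`, the POST method, the `prompt` field, and the helper names `build_query_request` and `query_local_model` are all assumptions for illustration, not the local-llama server's documented API, so check the repository before relying on them.

```python
import json
import urllib.request

# Hypothetical default: the real local-llama server's host, port, route and
# JSON field names are not documented in this listing; adjust to your setup.
DEFAULT_URL = "http://127.0.0.1:8000/"


def build_query_request(prompt: str, url: str = DEFAULT_URL) -> urllib.request.Request:
    """Build a JSON POST request carrying the prompt (hypothetical schema)."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def query_local_model(prompt: str, url: str = DEFAULT_URL, timeout: float = 60.0) -> str:
    """Send the prompt to a running local server and return the raw response text."""
    request = build_query_request(prompt, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, with a compatible server already listening locally, `query_local_model("What is a .gguf file?")` would return the server's raw response body. Keeping request construction in its own function makes it easy to inspect the outgoing payload without a running server.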

Related

open-os LLM Browser Extension

1,000+

ChatLlama: Chat with AI

106

sidellama

351

Ollama Chrome API

132

AiBrow: Local AI for your browser

57

Chatty for LLMs

39

Llama 3.1 405b

134

SecureAI Chat: Chat with local AI (LLaMA 3, Mistral, and more — no cloud)

37

Offline AI Chat (Ollama)

247

Local LLM Helper

237

WebextLLM

128

Llama AI

146