SEO Robots Inspector

387 users

Version: 1.7

Updated: July 2, 2024

Available in the Chrome Web Store

The SEO Robots Inspector is a Chrome extension that helps SEO professionals and webmasters check that their webpages are correctly configured for search engine crawling, indexing, and link following. It evaluates the meta robots tag, the X-Robots-Tag header, robots.txt rules, and the canonical URL, and reports detailed results so you can optimize how your site performs in search.

Key Features:

- Meta Robots Tag Analysis: Checks if the page has a meta robots tag and determines its directives (index, noindex, follow, nofollow).

- Canonical URL Detection: Identifies the canonical URL and checks whether it matches the current page URL, so you can catch duplicate content issues.

- Local Robots.txt Parsing: Fetches and analyzes the robots.txt file locally, without relying on external APIs, to confirm that important URLs are not blocked from crawling.

- Visual Indicators: Provides clear visual feedback using color-coded indicators for indexability, followability, crawlability, and canonical status directly on the extension icon.

- Blocked URLs Highlighter: Highlights URLs blocked by the robots.txt file directly on the webpage for easy identification.

- HTTP Headers Inspection: Fetches and displays the X-Robots-Tag header to check for additional indexing and link-following directives set at the server level.

- On-Demand Analysis: Analyzes the active tab on demand, so you always see up-to-date information on the page's SEO status.
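Meta robots tags and the X-Robots-Tag header share the same comma-separated directive syntax, so one way to derive the indexable and followable flags described above is to merge both directive sets and let any restrictive directive win. The Python sketch below assumes that rule; `parse_directives` and `robots_status` are hypothetical names rather than the extension's own code, and the sketch ignores user-agent-scoped header values such as `googlebot: noindex`.

```python
from typing import Optional


def parse_directives(value: Optional[str]) -> set[str]:
    """Split a comma-separated directive string such as "noindex, follow"."""
    if not value:
        return set()
    return {token.strip().lower() for token in value.split(",") if token.strip()}


def robots_status(meta_content: Optional[str], x_robots_tag: Optional[str]) -> dict[str, bool]:
    """Hypothetical helper: merge meta robots and X-Robots-Tag directives.

    A restrictive directive from either source wins, so a page with
    <meta name="robots" content="index"> plus "X-Robots-Tag: noindex"
    is reported as not indexable. User-agent-scoped header values are ignored.
    """
    directives = parse_directives(meta_content) | parse_directives(x_robots_tag)
    if "none" in directives:  # "none" is shorthand for "noindex, nofollow"
        directives |= {"noindex", "nofollow"}
    return {
        "indexable": "noindex" not in directives,
        "followable": "nofollow" not in directives,
    }


print(robots_status("index, nofollow", None))
# {'indexable': True, 'followable': False}
print(robots_status("index, follow", "noindex"))
# {'indexable': False, 'followable': True}
```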

How It Works:

- Active Tab Analysis: Automatically analyzes the active tab when the page loads, when the user activates the extension, or when tab/window focus changes.

- Data Collection: Collects meta robots content, canonical URL, X-Robots-Tag header, and robots.txt information.

- Local Robots.txt Processing: Fetches and parses robots.txt files locally, applying rules for different user agents including Googlebot.

- Status Display: Displays the SEO status in a popup window with easy-to-understand indicators and detailed information.

- Highlight Blocked URLs: Allows users to highlight all URLs blocked by robots.txt on the page for immediate visibility.
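The local robots.txt step can be pictured as two decisions: pick the group of rules that applies to the crawler (an exact user-agent match such as `googlebot`, otherwise `*`), then pick the most specific matching rule, which also tells you which rule decided the result. The Python sketch below follows the precedence Google documents for robots.txt (the longest matching path wins, `Allow` wins a tie, `*` is a wildcard, and a trailing `$` anchors the end). It is a simplified illustration under those assumptions, not the extension's parser; `Rule`, `parse_groups`, `select_rules`, and `match_url` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit


@dataclass
class Rule:
    allow: bool
    path: str  # raw pattern from the file, e.g. "/private/" or "/*.pdf$"

    def matches(self, target: str) -> bool:
        # "*" matches any run of characters; a trailing "$" anchors the end.
        anchored = self.path.endswith("$")
        body = self.path[:-1] if anchored else self.path
        regex = ".*".join(re.escape(part) for part in body.split("*"))
        return re.match(regex + ("$" if anchored else ""), target) is not None


def parse_groups(robots_txt: str) -> list[tuple[list[str], list[Rule]]]:
    """Parse robots.txt into (user_agents, rules) groups."""
    groups: list[tuple[list[str], list[Rule]]] = []
    agents: list[str] = []
    rules: list[Rule] = []
    in_rules = False  # True once the current group has an Allow/Disallow line
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                groups.append((agents, rules))
                agents, rules, in_rules = [], [], False
            agents.append(value.lower())
        elif field in ("allow", "disallow") and agents:
            in_rules = True
            if value:  # an empty Disallow blocks nothing, so no rule is stored
                rules.append(Rule(allow=(field == "allow"), path=value))
    if agents:
        groups.append((agents, rules))
    return groups


def select_rules(groups: list[tuple[list[str], list[Rule]]], user_agent: str) -> list[Rule]:
    """Use groups naming this crawler exactly; otherwise fall back to '*'."""
    token = user_agent.lower()
    matched = [rules for agents, rules in groups if token in agents]
    if not matched:
        matched = [rules for agents, rules in groups if "*" in agents]
    return [rule for rules in matched for rule in rules]


def match_url(robots_txt: str, url: str, user_agent: str = "googlebot") -> tuple[bool, Optional[Rule]]:
    """Return (allowed, deciding rule). Longest pattern wins; Allow wins ties."""
    parts = urlsplit(url)
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    best: Optional[Rule] = None
    for rule in select_rules(parse_groups(robots_txt), user_agent):
        if not rule.matches(target):
            continue
        longer = best is None or len(rule.path) > len(best.path)
        tie_allow = best is not None and len(rule.path) == len(best.path) and rule.allow
        if longer or tie_allow:
            best = rule
    return (best is None or best.allow), best


ROBOTS = """
User-agent: *
Disallow: /

User-agent: googlebot
Disallow: /private/
Allow: /private/press/
Disallow: /*.pdf$
"""

print(match_url(ROBOTS, "https://example.com/private/press/launch"))
# (True, Rule(allow=True, path='/private/press/'))
print(match_url(ROBOTS, "https://example.com/about", user_agent="bingbot"))
# (False, Rule(allow=False, path='/'))
```

Returning the deciding rule alongside the verdict is what makes a "which rule matched" report possible without a second pass over the file.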

User Interface:

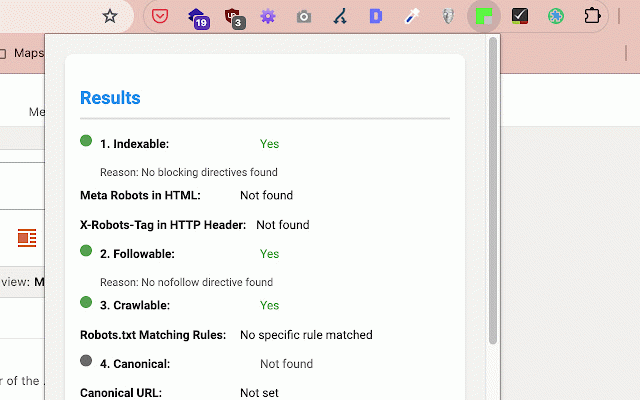

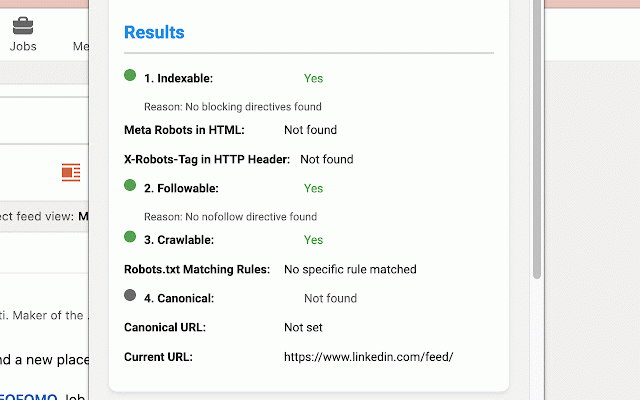

- Results Section: Shows the indexable, followable, and crawlable status, the meta robots content, the X-Robots-Tag content, robots.txt information, and the canonical URL.

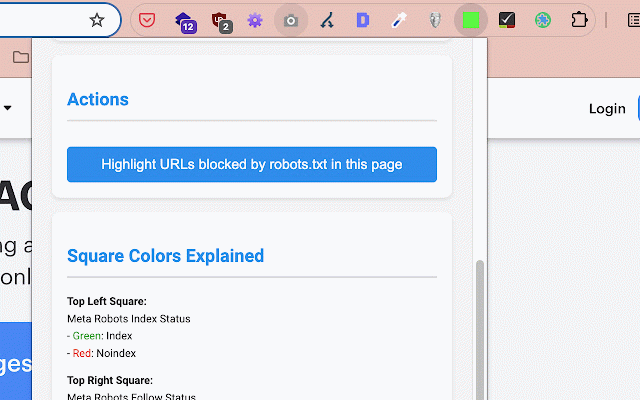

- Actions Section: Includes a button to highlight blocked URLs on the page.

- Explanation Section: Provides a legend for the visual indicators used in the extension icon and analysis results.
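The canonical status in the Results section comes down to comparing the declared canonical URL with the page's own URL. A minimal Python sketch, assuming the comparison resolves relative `href` values, ignores the fragment, and treats scheme and host case-insensitively; `normalize`, `canonical_status`, and the `self`/`other`/`missing` labels are hypothetical, not the extension's documented behavior.

```python
from typing import Optional
from urllib.parse import urljoin, urlsplit, urlunsplit


def normalize(url: str) -> str:
    """Lowercase scheme and host, default an empty path to "/", drop the fragment."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, ""))


def canonical_status(page_url: str, canonical_href: Optional[str]) -> str:
    """Hypothetical helper returning "missing", "self", or "other"."""
    if not canonical_href:
        return "missing"
    # rel="canonical" may be relative, so resolve it against the page URL first.
    canonical = urljoin(page_url, canonical_href.strip())
    return "self" if normalize(canonical) == normalize(page_url) else "other"


print(canonical_status("https://Example.com/shoes?color=red#reviews", "/shoes?color=red"))
# self
print(canonical_status("https://example.com/shoes?color=red", "https://example.com/shoes"))
# other
```

Query strings are kept in the comparison on purpose: a parameterized page that canonicalizes to its parameter-free version is a common, legitimate setup, and reporting it as "other" lets you confirm that choice at a glance.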

Permissions:

- Active Tab: Needed to analyze the content of the currently active tab.

- Scripting and Storage: Used for injecting scripts and storing tab status locally.

- Host Permissions: Required to fetch and analyze robots.txt files and X-Robots-Tag headers from all websites.

Installation and Usage:

- Install the Extension: Add the SEO Robots Inspector from the Chrome Web Store.

- Analyze Pages: Navigate to any webpage and click the extension icon to analyze its SEO status.

- View Results: Check the detailed results in the popup window.

- Highlight Blocked URLs: Click the "Highlight Blocked URLs" button to see which links are blocked by robots.txt.
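Highlighting blocked URLs amounts to resolving each link on the page and testing it against the site's robots.txt. The Python sketch below uses the standard library's `urllib.robotparser` for that test; `blocked_links` is a hypothetical helper, and using `Googlebot` as the default user agent is an assumption. Note that `urllib.robotparser` uses plain prefix matching and applies the first matching rule in file order, so for files that rely on wildcards or overlapping `Allow`/`Disallow` rules its verdict can differ from Googlebot's.

```python
from urllib.parse import urljoin, urlsplit
from urllib.robotparser import RobotFileParser


def blocked_links(page_url: str, hrefs: list[str], robots_txt: str, user_agent: str = "Googlebot") -> list[str]:
    """Hypothetical helper: return absolute same-host links that robots.txt disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    host = urlsplit(page_url).netloc
    blocked = []
    for href in hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links against the page
        # Only this host's robots.txt applies; other hosts (and mailto: links) are skipped.
        if urlsplit(absolute).netloc != host:
            continue
        if not parser.can_fetch(user_agent, absolute):
            blocked.append(absolute)
    return blocked


page = "https://example.com/blog/"
robots = "User-agent: *\nDisallow: /private/\nDisallow: /tmp\n"
links = [
    "/private/report",
    "post-1",
    "https://example.com/tmp/cache",
    "https://other.example/private/x",
    "mailto:team@example.com",
]
print(blocked_links(page, links, robots))
# ['https://example.com/private/report', 'https://example.com/tmp/cache']
```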

Advanced Features:

- Real-time Updates: The extension icon updates in real time as you navigate between tabs or pages.

- Efficient Robots.txt Handling: Implements caching and timeout mechanisms for efficient robots.txt fetching and parsing.

- Detailed Rule Matching: Provides specific information on which robots.txt rule matched for the current URL.
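Caching and timeouts matter because the extension re-analyzes pages as you switch tabs. One plausible approach, sketched below in Python, caches each origin's robots.txt for a fixed time-to-live and bounds every fetch with a timeout. The `RobotsCache` class, the 600-second TTL, and the 5-second timeout are illustrative assumptions, not the extension's actual values; the fetch function is injectable so the cache can be exercised without network access.

```python
import time
import urllib.request
from typing import Callable, Optional
from urllib.parse import urlsplit

# A fetcher takes (url, timeout_seconds) and returns the response body as text.
Fetcher = Callable[[str, float], str]


def http_fetch(url: str, timeout: float) -> str:
    """Default fetcher: stdlib HTTP GET that gives up after `timeout` seconds."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


class RobotsCache:
    """Hypothetical per-origin robots.txt cache with a time-to-live."""

    def __init__(self, fetch: Fetcher = http_fetch, ttl: float = 600.0, timeout: float = 5.0):
        self.fetch = fetch
        self.ttl = ttl          # seconds a cached copy stays fresh (assumed value)
        self.timeout = timeout  # seconds before a fetch is abandoned (assumed value)
        self._entries: dict[str, tuple[float, Optional[str]]] = {}

    def get(self, page_url: str) -> Optional[str]:
        """Return robots.txt text for the page's origin, or None if unavailable."""
        parts = urlsplit(page_url)
        origin = f"{parts.scheme}://{parts.netloc}"  # robots.txt is scoped per origin
        now = time.monotonic()
        cached = self._entries.get(origin)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]
        try:
            body: Optional[str] = self.fetch(origin + "/robots.txt", self.timeout)
        except OSError:
            # URLError, HTTPError (e.g. 404) and timeouts are all OSError subclasses.
            body = None
        self._entries[origin] = (now, body)
        return body
```

Keying the cache by origin (scheme, host, and port) mirrors how robots.txt is scoped, so repeated tab switches within one site hit the network at most once per TTL window. Failures are cached too, which keeps an unreachable host from being retried on every tab change.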

Enhance your SEO workflow and ensure your website's pages are optimized for search engines with the SEO Robots Inspector! Get instant insights into crawling, indexing, and canonical issues without leaving your browser.

Related:

- GSC Guardian: 10,000+
- Extractor de entidades: 4,000+
- Easy Custom Comparison: 1,000+
- Seerobots: 20,000+
- Robots Exclusion Checker: 40,000+
- Better Regex in Search Console: 2,000+
- Make it tool Extension: 2,000+
- Gandalf: 6,000+
- Growwer | Link building and PR in seconds: 1,000+
- Redirect Path: 300,000+
- View Rendered Source: 30,000+
- GSC crawl stats downloader: 2,000+
- Hreflang Tag Checker: 10,000+
- SEO Render Insight Tool: 4,000+
- SEO on SERPs: 1,000+
- Schema Builder for Structured Data: 40,000+
- Sprout SEO Extension 🌱: 2,000+
- GMBspy: 30,000+
- Google Search Console Enhanced Analytics: 10,000+
- Link Redirect Trace: 100,000+
- Advanced GSC Visualizer: 8,000+
- gs location changer: 60,000+
- SEOcrawl SEO Extension: 3,000+
- Show number of results and indexed pages on Google SERP (for SEO): 1,000+