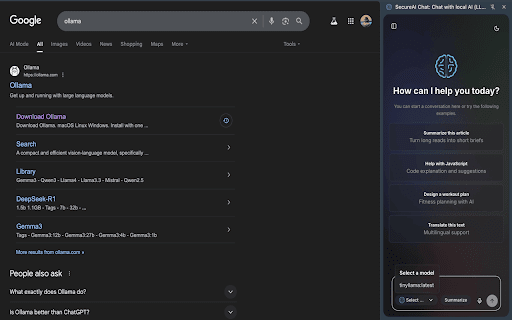

SecureAI Chat: Chat with local AI (LLaMA 3, Mistral, and more — no cloud)

39 users

Developer: Chethan

Version: 1.0.0

Updated: 2025-07-24

Available in the Chrome Web Store

SecureAI Chat brings the power of local LLMs directly into your browser. This lightweight extension lets you chat with open-source AI models (e.g., LLaMA 3, Mistral, etc.) running locally on your machine via Ollama: no cloud, no external APIs, no key required. Whether you're coding, researching, reading, or brainstorming, launch the floating chat window on any tab, ask questions, and get answers instantly.

Features:

🔒 100% private: your data stays on your local system. No tracking, and no data is sent to cloud servers.

💬 Clean, simple chat UI: launch a floating window in one click, summarise the full page or selected content, and get a fast, intelligent response.

🛠️ Powered by Ollama: choose between multiple locally installed LLMs.

Disclaimer: This extension is not affiliated with or endorsed by Meta, Mistral, Ollama, or Google. “LLaMA”, “Ollama”, and “Chrome” are trademarks of their respective owners.

Related

open-os LLM Browser Extension

1,000+

Cognito: ChatGPT 5, Ollama, Claude

58

NativeMind: Your fully private, open-source, on-device AI assistant

4,000+

Local LLama LLM AI Chat Query Tool

135

Sen Chat: AI Sidebar - Chat with all AI models

103

AIskNet

37

Llama AI

147

Highlight X | AI Chat with Ollama, Local AI, Open AI, Claude, Gemini & more

528

Ollama Client - Chat with Local LLM Models

1,000+

AI Summary Helper - OpenAI, Gemini, Mistral, Ollama, Kindle Save Articles

248

Private AI Assistant: Running LLM locally

249

Offload: Fully private AI for any website using local models.

38